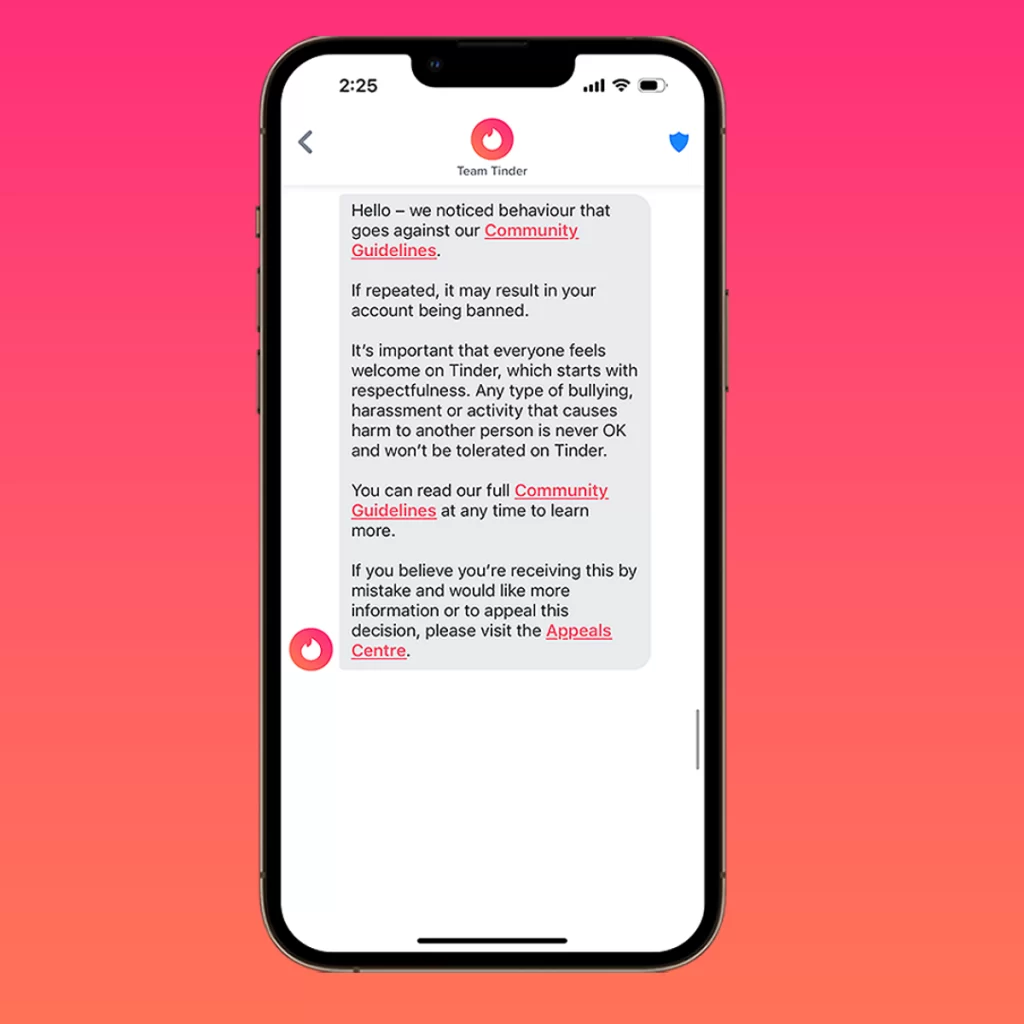

Tinder Implements Warning System to Curb Inappropriate Behavior

By DaMarko Webster

Tinder, the ubiquitous dating app, is intensifying its efforts to curb unsavory behavior among its users. In a move aimed at fostering a safer and more respectful online environment, the platform is implementing a stringent warning system. The system, set to launch in the coming weeks, signals a more proactive approach to stopping inappropriate and offensive interactions.

At the heart of this initiative is a three-tiered warning framework designed to uphold the principles of authenticity, respect, and inclusivity. Users whose conversations stray from these principles may receive prompts such as “Does This Bother You?” and “Are You Sure?”, generated by a machine-learning algorithm built into the app.

The crackdown targets a range of transgressions, from harassment and impersonation to the murkier realm of online solicitation. Notably, Tinder appears to be sharpening its focus on rooting out “advertising,” perhaps a euphemism for the promotion of sexual services on the platform.

With this warning system, Tinder aims to shift more of the responsibility onto its users, reminding them to exercise discretion and uphold community standards in their interactions. A warning will appear within the very chat where the offense occurred, serving as a tangible reminder of the consequences of the user’s actions.

For repeat offenders, the consequences escalate quickly, up to removal from the platform altogether. By combining automated detection with human oversight, Tinder is taking decisive steps toward a more respectful digital space for its diverse user base. As the rollout approaches, the initiative marks a watershed moment in the platform’s ongoing evolution toward a safer and more inclusive online dating experience.