The Infrastructure Behind Intelligence

By Brian K. Neal

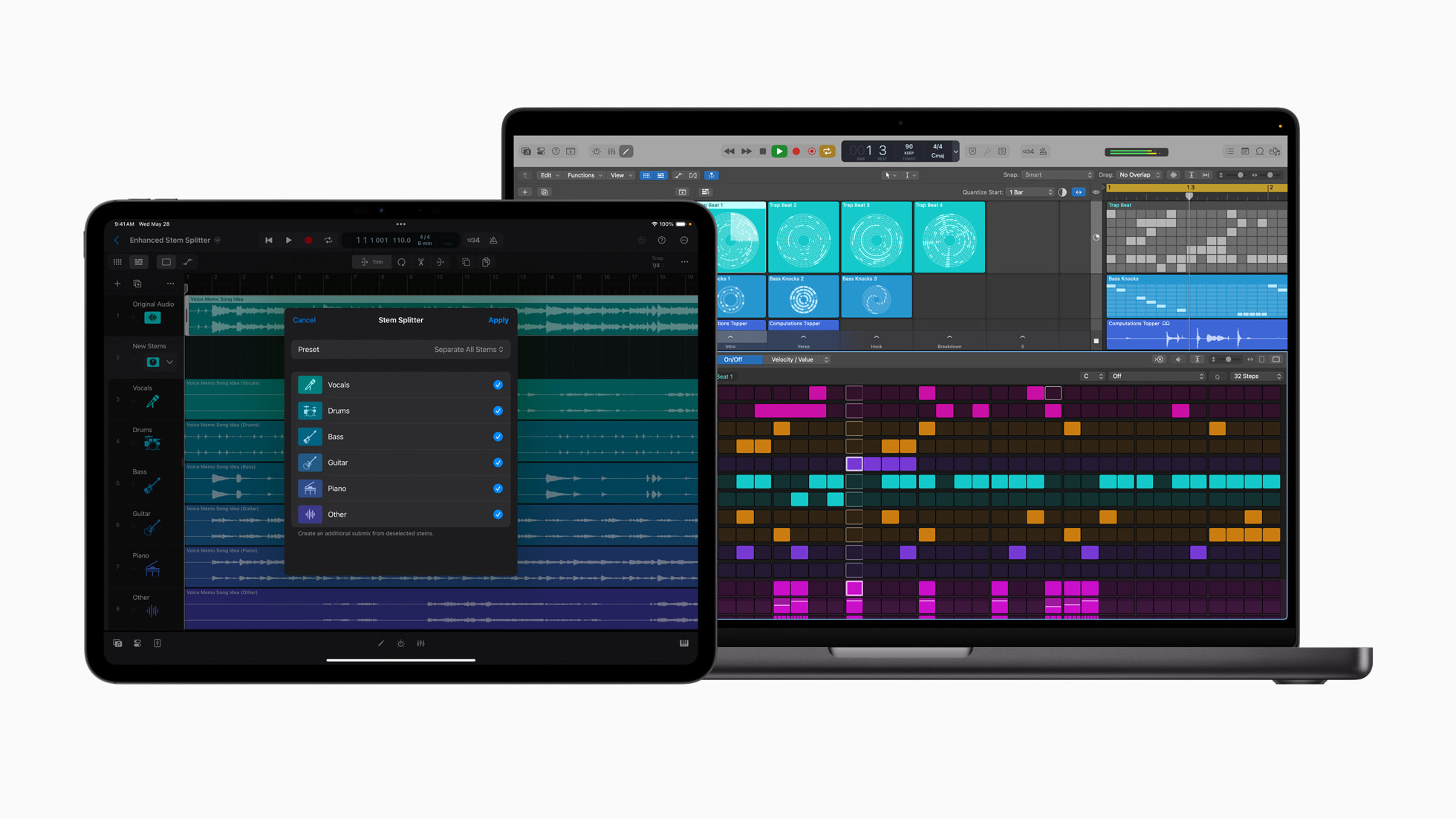

Artificial intelligence was introduced to the public as frictionless power. A tool that collapses time, accelerates output, and distributes capability at unprecedented scale. Intelligence felt portable. Immediate. Weightless. For a moment, it seemed as though the interface itself was the revolution.

But intelligence at scale is never weightless.

Behind every prompt sits infrastructure. Models require memory. Memory requires fabrication. Fabrication requires allocation. As AI systems expand in size and utility, so does their demand for high-bandwidth memory, enterprise GPUs, and dense storage architecture. Silicon is not abstract. It is manufactured, prioritized, and deployed according to demand.

The rapid expansion of AI data centers, facilities built to train and run increasingly large models, has intensified that demand. These environments operate at a scale measured in racks, not devices. Thousands of memory modules. High-bandwidth configurations engineered for sustained computation. When enterprise infrastructure scales at that level, manufacturers naturally direct production toward those contracts. Not out of conspiracy, but economics. Capacity flows where utilization and margin are clearest.

For the everyday user, the interface remains smooth. Cloud platforms absorb the friction. Subscription tiers replace ownership. Processing power becomes something accessed rather than possessed. AI continues to feel abundant.

Yet under that surface, a structural shift is underway.

As enterprise demand concentrates advanced memory and compute resources upward, access becomes tiered less by ideology and more by infrastructure. Local experimentation—running large models independently, prototyping without cloud reliance, iterating without usage meters—requires deliberate hardware investment. Not because intelligence is scarce, but because the hardware that enables autonomy is prioritized strategically.

This is not a crisis. It is a reordering.

The story is not that consumer prices are destined to spike overnight. Markets adjust. Production expands. Cycles balance. The deeper observation is subtler: intelligence now carries physical requirements that were previously invisible to most people. And infrastructure has always been the quiet lever that organizes participation.

Creative velocity is increasingly linked to compute capacity. Ownership of hardware becomes a form of independence. Dependence on remote processing adds another layer of terms: usage meters, pricing tiers, and access policies set by the provider. The divide is not between those who use AI and those who do not. It is between those who control their stack and those who operate entirely within rented architecture.

Artificial intelligence is expanding possibility at an extraordinary rate. That expansion is real. But possibility does not float. It rests on memory bandwidth, fabrication timelines, allocation strategy, and supply chains that do not scale infinitely. When infrastructure shifts, power shifts first. Culture tends to recognize it later.

The interface feels democratic.

The infrastructure is directional.

Intelligence remains abundant.

But intelligence, increasingly, has requirements.
